Information Is the Fundamental Reality…

...and Information Theory follows the same scheme as the Trinity/Creation

If all that exists is the infinite Trinity, and he is a Mind that chose to conceive and thereby bring Creation into being, we might expect to find evidence of this truth in the universe around us. We would expect to see signs that our reality is not just physical stuff. There would be evidence that reality is in its essence “mental,” i.e., that we live in a world consisting of ideas. John Archibald Wheeler[1] was a 20th-century physicist who worked on the Manhattan Project that produced the atomic bomb, pursued novel approaches to unified field theory, and popularized the term “black hole.” Wheeler commented that his career could be divided into three stages. In the first stage, when he was solving nuclear reactor problems, he said, “Everything is particles.” In the second stage, when he was resurrecting general relativity for cosmology, he said, “Everything is waves.” And in the last phase of his life, when he was exploring the foundations of quantum mechanics, he said, “Everything is information.”[2] Wheeler also famously coined the phrase “it from bit,” a shorthand way of expressing “that the physical world has at bottom — at a very deep bottom, in most instances — an immaterial source and explanation; that what we call reality arises in the last analysis from the posing of yes-no questions…in short, that all things physical are information-theoretic in origin and this is a participatory universe.”[3][4]

“Information” has already been mentioned several times in previous posts. The universe is drenched with it and may even be described as being it. What exactly is information? How is it defined? The textbook definition of information is the communication or reception of knowledge or intelligence. When we think about information, we quickly realize that it isn’t really of any use, nor is it really “information,” if what is being communicated is no better than random chance or if it conveys something that is already known or expected. A weather forecast derived from a coin toss isn’t very predictive or useful, nor is telling someone what the weather is at the present moment where they are standing. In other words, telling someone something completely random or something they already know isn’t really conveying information at all. Information must be “newsworthy.” For example, to say that we live on planet Earth isn’t very informative. To say that the Earth is crashing into the sun later today is much more interesting. The former statement contains little information, while the latter is newsworthy and therefore informative. Also, because the news is unexpected and unlikely, in mathematical terms the statement can be thought of as having low probability. (That is different from saying that the statement is true or untrue. Probability and truth are different concepts. Low-probability events happen all the time, even though each one was unlikely beforehand.[5])

Following this logic, we can see that completely random statements and statements that are the result of known natural laws both contain very little information. The first is just a wild guess, and the second is exactly what is expected. Neither is newsworthy. Waves washing onto a beach can form lines and shapes in the sand. They may be intricate and beautiful, but they are completely expected based on the workings of natural laws. Those lines provide no information. However, if the lines in the sand formed the word “HELP,” they would contain a significant amount of information.

Therefore, because high-information statements like the word “HELP” are far from random and highly unlikely to be the products of random chance or the regular workings of natural laws, they always end up being the products of an intelligent mind. This kind of logic is the foundational principle of forensic fields such as criminology and archaeology. A knife found in a man’s back is more likely to have been put there by a burglar than by a gust of wind. The pyramids at Giza are more likely to have been built by skilled workers than formed by erosion.

“The communication or reception of knowledge or intelligence” is the activity of the persons of the Trinity. It is the work they do. Within the Trinity, there is a constant exchange of intelligence, of information, even of personhood itself. Their communication is not white noise or vague emotions. As the expression of an infinitely intelligent Mind, the Trinity’s self-communication carries infinite information. It is neither random nor routine and expected. Since Creation is the product of an immense Mind, we can readily understand why Creation contains such a staggering amount of information. For example, the Big Bang alone, a unique and unprecedented ex nihilo creation event, is staggeringly “newsworthy.” By the principles of information theory, that simple fact alone makes it impossible that it was a random event or caused by the normal working of natural laws, for there were no events or pre-existing natural laws before this event. There was nothing, and then, surprise!

A number of modern physicists, Wheeler among them, have come to the conclusion that ideas and information are all there is, and that there is really no such thing as material “stuff.” One way to explain this is by analogy with the modern computer. When we interact with one, we see a screen, a keyboard, etc. We know that it contains information in the form of software and data files. In fact, depending on the size of its storage, a computer could hold a significant portion of all the information in the Library of Congress, or even more. However, regardless of how much information the hardware of the computer “contains,” it weighs no more and no less than when it was a blank machine fresh off the factory floor. Clearly, information doesn’t weigh anything and has no physical existence, though it can be “stored” in the specific arrangement of physical things such as electrons on memory chips or ink on a page. But what about the things that store this non-physical information? What about the physical components of the computer itself? The design of the computer’s physical parts is what makes a computer what it is. Plastic and metal are not a computer; they are just blank materials. But when a mind adds information to those materials and thereby shapes and configures them into useful computer components, information has been transferred to and stored in those formerly blank materials.

Why, then, do the intelligent minds of computer engineers choose certain materials to make computer components? The answer lies in the characteristics of those materials. What are characteristics? They are ideas and information. If a material is magnetic or conducts electricity, then it is not inert. It is a certain thing and not the many other things that it could be. Those “characteristics,” or “information,” of a material can be put to good use in a machine by clever minds. But what makes up a particular material and gives it its properties? It is the particular molecular makeup of that material. Once again, the molecules have certain qualities but not others. But it doesn’t stop there. The atoms that make up the molecules also have their own particular properties. Finally, the atoms follow physical laws, all of which are ideas and information: certain characteristics and concepts, but not others.

We recognize from daily experience that the only place where information, a non-material thing, truly “exists” is in non-material minds. For example, the information in music can be stored in many different types of media such as CDs, hard drives, magnetic tapes, analog records, and even the neurons in physical brains. In addition, the same information can be stored in many different copies of CDs or brains, yet the “information” remains the same. We intuitively understand that the information and the media embodying it are two different things. So, if there were no “media” or “material world,” could information still exist? Most people would say yes. Information has an independent reality separate from any physical “storage” medium, including the physical brain. It exists “out there” on its own, wherever and whatever that “out there” is.[6]

Our predecessors may have lived in a “Stone Age,” a “Bronze Age,” an “Iron Age,” a “Steam Age,” or even a “Space Age.” These eras were marked by advances in materials science and certain physical technologies. We now live in a “Silicon Age” of computers, but what makes our age different is not silicon itself, which is refined from ordinary sand, but rather the means we have developed to manipulate information on a scale never before imagined. We have turned abundant sand into information processors. Our ability not just to store and replicate information but to manipulate it is unmatched in the history of the world. Information has become a quotidian commercial product. That is new.

Our “Silicon Age” is really an “Information Age,” which is made possible by information theory pioneered by Bell Labs scientist Claude Shannon.[7] Wikipedia provides a good definition:

Information theory studies the transmission, processing, extraction, and utilization of information. Abstractly, information can be thought of as the resolution of uncertainty. In the case of communication of information over a noisy channel, this abstract concept was made concrete in 1948 by Claude Shannon in his paper “A Mathematical Theory of Communication,”[8] in which “information” is thought of as a set of possible messages, where the goal is to send these messages over a noisy channel, and then to have the receiver reconstruct the message with low probability of error, in spite of the channel noise.[9]
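The quoted goal, letting the receiver reconstruct a message with a low probability of error despite channel noise, can be illustrated with the simplest error-correcting scheme, a repetition code. This is a toy sketch, not Shannon’s own construction: it assumes a binary symmetric channel in which each bit is flipped independently with an assumed probability of 5%, sends every bit three times, and lets the receiver take a majority vote. The function names encode, noisy_channel, decode, and error_rate are hypothetical, chosen only for this example.

```python
import random

def encode(bits, r=3):
    """Repetition code (illustrative): transmit every bit r times; r should be odd."""
    return [b for b in bits for _ in range(r)]

def noisy_channel(bits, flip_prob, rng):
    """Assumed binary symmetric channel: flip each bit independently with probability flip_prob."""
    return [b ^ 1 if rng.random() < flip_prob else b for b in bits]

def decode(bits, r=3):
    """Majority vote over each block of r received copies."""
    return [1 if sum(bits[i:i + r]) > r // 2 else 0 for i in range(0, len(bits), r)]

def error_rate(sent, received):
    """Fraction of positions where the received bit differs from the sent bit."""
    return sum(a != b for a, b in zip(sent, received)) / len(sent)

rng = random.Random(2024)  # fixed seed so the run is repeatable
message = [rng.randint(0, 1) for _ in range(10_000)]
flip_prob = 0.05  # assumed noise level: 5% of bits flipped

unprotected = noisy_channel(message, flip_prob, rng)
protected = decode(noisy_channel(encode(message), flip_prob, rng))

print(f"error rate without coding:          {error_rate(message, unprotected):.4f}")
print(f"error rate with 3x repetition code: {error_rate(message, protected):.4f}")
```

With a 5% flip rate the unprotected error rate should come out near 0.05, while majority voting fails only when at least two of the three copies are flipped, about 3p²(1 - p) + p³ ≈ 0.7% of the time. The price is that the channel must carry three times as many bits; Shannon’s theory shows that far more efficient codes are possible.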

Information theory is critical to the modern world because it tells engineers how compactly messages can be encoded and how much information a channel can carry reliably despite noise, which makes possible the efficient transmission of many messages across a single channel. Instead of a single telephone wire connecting two telephones and carrying a single conversation, Shannon’s information theory provides the intellectual framework for designing systems in which multiple messages are sent across the same wire (or channel) and then properly separated and decoded once they reach their destination. In effect, information theory provides a way to ungarble multiple messages. Many “conversations” can occur simultaneously on the same “telephone wire,” multiplying throughput and productivity many times over.
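Information theory says how much a channel can carry; how several conversations are interleaved on it is the job of a multiplexing scheme. One simple scheme is time-division multiplexing: the wire carries one symbol from each conversation in turn, and the far end sorts them back out by position. The sketch below assumes that scheme, with hypothetical function names multiplex and demultiplex and a padding symbol for conversations that run out early. Real telephone systems also used other techniques, such as frequency-division multiplexing, and need framing and synchronization that are omitted here.

```python
from itertools import zip_longest

PAD = "\0"  # filler for a slot whose conversation has nothing left to send

def multiplex(messages):
    """Round-robin time-division multiplexing: one symbol from each message per frame."""
    frames = zip_longest(*messages, fillvalue=PAD)
    return "".join("".join(frame) for frame in frames)

def demultiplex(line, n):
    """Message i occupies slot i of every frame, i.e. every n-th symbol starting at i."""
    return [line[i::n].rstrip(PAD) for i in range(n)]

conversations = ["HELLO", "HI", "GOOD DAY"]
wire = multiplex(conversations)                # one shared "telephone wire"
print(len(wire))                               # 24: 8 frames of 3 slots each
print(demultiplex(wire, len(conversations)))   # ['HELLO', 'HI', 'GOOD DAY']
```

Because each conversation always owns the same slot in every frame, the receiving end needs no extra labels to separate the messages, only the agreed number of slots.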

Since “information” is related to probability (as the communication of the unlikely), the amount of information in a message or an event can be quantified from the probability of its occurrence: the less likely the outcome, the more information it conveys when it happens, and an outcome that was certain conveys none. The formula for this quantity, called the self-information or “surprisal” of the outcome, is:

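$$I = -\log_2 p$$

where p is the probability of the outcome and I is the amount of information, in bits, that its occurrence conveys.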
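As a minimal sketch, the formula can be evaluated in Python for a few outcomes. The probabilities are assumptions for illustration: a fair coin, a fair die, and a deliberately simple, hypothetical model in which each letter of “HELP” is drawn uniformly at random from the 26-letter alphabet, so that p = (1/26)^4. The function name self_information is likewise only illustrative.

```python
import math

def self_information(p):
    """Information, in bits, conveyed when an outcome of probability p occurs: -log2(p)."""
    if not 0 < p <= 1:
        raise ValueError("p must be a probability in (0, 1]")
    return -math.log2(p)

examples = {
    "fair coin lands heads": 1 / 2,
    "fair die shows a six": 1 / 6,
    # Hypothetical model: each of the 4 letters drawn uniformly from 26 letters
    "random letters spell HELP": (1 / 26) ** 4,
}

for event, p in examples.items():
    print(f"{event}: {self_information(p):.2f} bits")
```

Under these assumptions the three outcomes carry roughly 1, 2.6, and 18.8 bits, while an outcome with p = 1 (something already known) carries 0 bits, matching the point that telling someone what they already know conveys no information.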

The logarithm is taken in base 2, which is why information is measured in binary digits. In base 2 the only digits available for expressing any value are 0 and 1 (compared with the digits 0 through 9 in the base 10 that we more commonly use). This is known as binary code. (“Binary” means that something consists of only two things, in this case 0 or 1.) Since computers store information by turning huge numbers of tiny switches on or off, this binary code corresponds very well with switches being turned on or off within the memory of the machine. Each binary digit, and each on/off switch needed to store it, is called a “bit.” One bit is exactly the information conveyed by an outcome with probability 1/2, such as the toss of a fair coin, because $-\log_2(1/2) = 1$. A “byte” is a sequence of 8 bits.[10] Therefore, a kilobyte (KB) of information occupies approximately[11] 8,000 on/off switches in the memory of the machine, and so on.[12]
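The bits-and-bytes arithmetic can be checked with a short Python sketch. It assumes text is stored as UTF-8 (for plain English letters this is identical to 8-bit ASCII) and spells out the word “HELP” as the 0s and 1s a computer would store. The helper names to_bits and from_bits are hypothetical.

```python
BITS_PER_BYTE = 8

def to_bits(text):
    """Encode text as UTF-8 and spell out each byte as 8 binary digits."""
    return "".join(format(byte, "08b") for byte in text.encode("utf-8"))

def from_bits(bits):
    """Regroup the digits into 8-bit bytes and decode them back into text."""
    data = bytes(int(bits[i:i + BITS_PER_BYTE], 2) for i in range(0, len(bits), BITS_PER_BYTE))
    return data.decode("utf-8")

bits = to_bits("HELP")
print(bits)                  # 01001000010001010100110001010000
print(len(bits))             # 32 bits, i.e. 4 bytes
print(from_bits(bits))       # HELP

# Switches needed for one kilobyte under the two common conventions
print(1000 * BITS_PER_BYTE)  # kilobyte (kB) of 1,000 bytes: 8000
print(1024 * BITS_PER_BYTE)  # kibibyte (KiB) of 1,024 bytes: 8192
```

Each letter takes one byte, so four letters take 32 switches, and the last two lines show why a kilobyte is only “approximately” 8,000 switches: exactly 8,000 for 1,000 bytes, or 8,192 for 1,024.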

An information source, let’s say a human mind, wants to communicate some information. It sends a message through a telephone handset (the transmitter), and the message enters the phone system (the channel). As it passes through the system, noise, distortion, and static are inevitably introduced, degrading the quality of the transmitted message. Eventually, the signal is received by another phone or device (the receiver), which then “converts” or otherwise carries the message to its ultimate destination, which in this example is another human mind. Information theory can quantify, in terms of probabilities, both the information sent and the noise introduced into the system, so that engineers can design systems in which the message arrives at its destination as faithfully as possible.

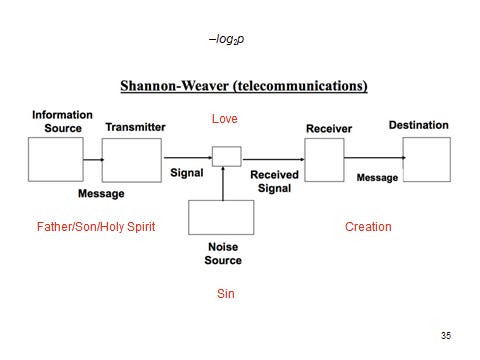
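The scheme described above (information source, transmitter, noisy channel, receiver, destination) can be sketched as a chain of Python functions. This is a toy model under simplifying assumptions: the message is the word “HELP,” the transmitter encodes it as 8-bit bytes, and the noise flips each bit independently with an assumed probability of 5%. The function names are hypothetical. The last lines compute the capacity of such a binary symmetric channel, C = 1 - H(p), the standard result for the most information per transmitted bit that can be sent reliably when each bit is flipped with probability p.

```python
import math
import random

def information_source():
    """The message to be sent (a fixed word for this demo)."""
    return "HELP"

def transmitter(message):
    """Turn the message into a signal: a list of bits, 8 per UTF-8 byte."""
    return [int(bit) for byte in message.encode("utf-8") for bit in format(byte, "08b")]

def noise_source(signal, flip_prob, rng):
    """Channel noise: flip each bit independently with probability flip_prob."""
    return [b ^ 1 if rng.random() < flip_prob else b for b in signal]

def receiver(signal):
    """Regroup the bits into bytes and decode; undecodable bytes become U+FFFD."""
    data = bytes(
        int("".join(str(b) for b in signal[i:i + 8]), 2) for i in range(0, len(signal), 8)
    )
    return data.decode("utf-8", errors="replace")

def binary_entropy(p):
    """H(p) = -p log2 p - (1 - p) log2 (1 - p), in bits."""
    if p in (0, 1):
        return 0.0
    return -p * math.log2(p) - (1 - p) * math.log2(1 - p)

def bsc_capacity(flip_prob):
    """Capacity of a binary symmetric channel: C = 1 - H(p) bits per transmitted bit."""
    return 1 - binary_entropy(flip_prob)

rng = random.Random(7)  # fixed seed so the run is repeatable
sent = transmitter(information_source())
received = noise_source(sent, flip_prob=0.05, rng=rng)
destination = receiver(received)

flipped = sum(a != b for a, b in zip(sent, received))
print(f"bits flipped by noise: {flipped} of {len(sent)}")
print(f"message at destination: {destination!r}")
print(f"capacity at 5% noise: {bsc_capacity(0.05):.2f} bits per transmitted bit")
```

Without any error-correcting code, even one flipped bit can turn “HELP” into a different or unreadable character at the destination. The capacity figure (about 0.71 bits per transmitted bit at 5% noise) quantifies how much of the channel a well-designed code could in principle still use reliably.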

It is fascinating that the scheme for the modern idea of “Shannon Information” and communications corresponds very closely with the scheme of the Trinity and its relationship to Creation. Since it appears that at the most basic level of reality what exists is a divine Mind and the information that it produces, it shouldn’t be surprising that the Trinity’s interactions with Creation follow the Shannon Information scheme exactly. The Message originates in the mind of the Father who is the information source, which is then transmitted via the Son and the Holy Spirit to Receivers in Creation, in particular human minds. However, when sin occurs, noise is introduced into the transmission of the message which causes distortion and a loss of information before it reaches the receiver in Creation. Sin was considered by the Church Fathers to be the loss of the existence of the good, just as noise or distortion in a communicated message represents loss of the existence of information in the message. Once again, we have a fundamental modern scientific concept revealing something about the God who created everything and in whom everything exists.

1. “John Archibald Wheeler,” Wikipedia, accessed March 13, 2025, https://en.wikipedia.org/wiki/John_Archibald_Wheeler#Participatory_Anthropic_Principle.

2. “Rob Sheldon weighs in on the fundamental building blocks of nature – particles, fields, or …,” Uncommon Descent, February 8, 2021, https://uncommondescent.com/intelligent-design/rob-sheldon-weighs-in-on-the-fundamental-building-blocks-of-nature-particles-fields-or/.

3. “John Archibald Wheeler Postulates ‘It from Bit,’” History of Information.com, accessed September 24, 2021, https://historyofinformation.com/detail.php?id=5041.

4. “It From Bit: What Did John Archibald Wheeler Get Right—and Wrong?” Mind Matters, May 20, 2021, https://mindmatters.ai/2021/05/it-from-bit-what-did-john-archibald-wheeler-get-right-and-wrong/.

5. A good example of this is the Powerball lottery. The odds of the reader personally winning the grand prize are effectively 0%, though the odds of someone, somewhere winning are 100%, since there are so many innumerates buying tickets and the drawings continue until there is a winner. It is true that “you can’t win if you don’t play”; however, it is equally true that you can’t lose if you don’t play.

6. The only answer that avoids fallacies such as infinite regress is that it is the mind of God.

7. “Claude Shannon,” Wikipedia, accessed March 14, 2025, https://en.wikipedia.org/wiki/Claude_Shannon.

8. C. E. Shannon, “A mathematical theory of communication,” in The Bell System Technical Journal, vol. 27, no. 4, pp. 623-656, Oct. 1948, doi: 10.1002/j.1538-7305.1948.tb00917.x.

9. “Information Theory,” Wikipedia, accessed August 12, 2020, https://en.wikipedia.org/wiki/Information_theory.

10. “Bits and Bytes,” accessed March 15, 2025, https://web.stanford.edu/class/cs101/bits-bytes.html.

11. Traditionally, computing has used “kilobyte” to mean 1,024 (2^10) bytes rather than 1,000, because memory is organized in powers of two; standards bodies now call 1,024 bytes a “kibibyte” (KiB) and reserve “kilobyte” (kB) for exactly 1,000 bytes. The difference is not important here, but the reader will get the idea.

12. “Byte” and “bit” are related terms from classical computing. “Qubit” is a term from the rapidly emerging field of quantum computing, which takes advantage of the fact that in quantum mechanics a system can exist in a superposition of possible states until it is measured. For certain problems this can speed up computation dramatically, in some cases exponentially, compared with classical computers. However, the information theory scheme they work within is the same.

“Quantum Computing,” Wikipedia, accessed March 15, 2025, https://en.wikipedia.org/wiki/Quantum_computing.